On many Unix-like systems, tar is a widely used tool for packaging and compressing files, and it is included by default in almost all common Linux and BSD distributions. However, tar often spends a lot of time on compression, because the compression it runs by default uses only a single thread. Fortunately, tar can hand compression over to an external program of your choice, which means we can use programs that support multi-threaded compression and get much higher speed!

From the tar manual (man tar), we can see:

-I, --use-compress-program PROG

filter through PROG (must accept -d)

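With the -I or --use-compress-program option, we can choose the external compression program we'd like to use. The "must accept -d" note means tar runs PROG as a filter when creating an archive and runs PROG -d when extracting one, so the same option works in both directions.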
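A minimal round-trip sketch, assuming GNU tar, where the same -I program both creates and extracts an archive. It uses pigz when it is installed and otherwise falls back to plain gzip; the temporary paths are only for illustration.

```shell
#!/usr/bin/env bash
# Round-trip sketch for tar -I (assumes GNU tar).
set -euo pipefail

# Prefer the parallel compressor; fall back to single-threaded gzip.
if command -v pigz >/dev/null 2>&1; then prog=pigz; else prog=gzip; fi

work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
mkdir -p "$work/src" "$work/out"
echo "hello" > "$work/src/a.txt"

# Create: tar writes the archive through "$prog" (compression).
tar -I "$prog" -cf "$work/demo.tgz" -C "$work" src

# Extract: tar reads the archive through "$prog -d" (decompression).
tar -I "$prog" -xf "$work/demo.tgz" -C "$work/out"

cat "$work/out/src/a.txt"   # prints: hello
```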

The three parallel compression tools I will use today can all be easily installed with apt install on Debian/Ubuntu-based GNU/Linux distributions. Below are the command names, which are also the apt package names. Please note that newer versions of Ubuntu and Debian no longer have the pxz package, but pixz does a similar job:

- gz: pigz
- bz2: pbzip2
- xz: pxz, pixz
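On Debian/Ubuntu the packages can be installed in one go with sudo apt install pigz pbzip2 pixz (add pxz only on releases that still ship it). The short sketch below just reports which of the four tools are already on PATH; check_tools is a hypothetical helper name, not an existing command.

```shell
#!/usr/bin/env bash
# check_tools: hypothetical helper that reports which of the
# parallel compressors listed above are available on PATH.
check_tools() {
  local tool
  for tool in pigz pbzip2 pixz pxz; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "$tool: installed"
    else
      echo "$tool: missing"
    fi
  done
}

check_tools
```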

The usual commands to create a compressed tarball look like this:

- gz: tar -czf tarball.tgz files
- bz2: tar -cjf tarball.tbz files
- xz: tar -cJf tarball.txz files

The multi-threaded versions:

- gz: tar -I pigz -cf tarball.tgz files
- bz2: tar -I pbzip2 -cf tarball.tbz files
- xz (pixz): tar -I pixz -cf tarball.txz files
- xz (pxz): tar -I pxz -cf tarball.txz files
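Since the only thing that changes is the program after -I, the choice can be wrapped in a small shell function. This is a sketch under stated assumptions: ptar is a hypothetical name, the extension-to-tool mapping simply mirrors the list above, and it falls back to single-threaded gzip/bzip2/xz when the parallel tool is missing, which still produces the same archive format.

```shell
#!/usr/bin/env bash
# ptar ARCHIVE FILE...: hypothetical wrapper around "tar -I PROG -cf".
# Chooses a parallel compressor from the archive extension and falls
# back to the single-threaded tool when the parallel one is missing.
ptar() {
  local archive=$1 par fallback prog
  shift
  case "$archive" in
    *.tgz|*.tar.gz)  par=pigz;   fallback=gzip  ;;
    *.tbz|*.tar.bz2) par=pbzip2; fallback=bzip2 ;;
    *.txz|*.tar.xz)  par=pixz;   fallback=xz    ;;
    *) echo "ptar: unknown extension: $archive" >&2; return 1 ;;
  esac
  if command -v "$par" >/dev/null 2>&1; then prog=$par; else prog=$fallback; fi
  tar -I "$prog" -cf "$archive" "$@"
}
```

With pigz installed, ptar tarball.tgz files runs the same command as tar -I pigz -cf tarball.tgz files.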

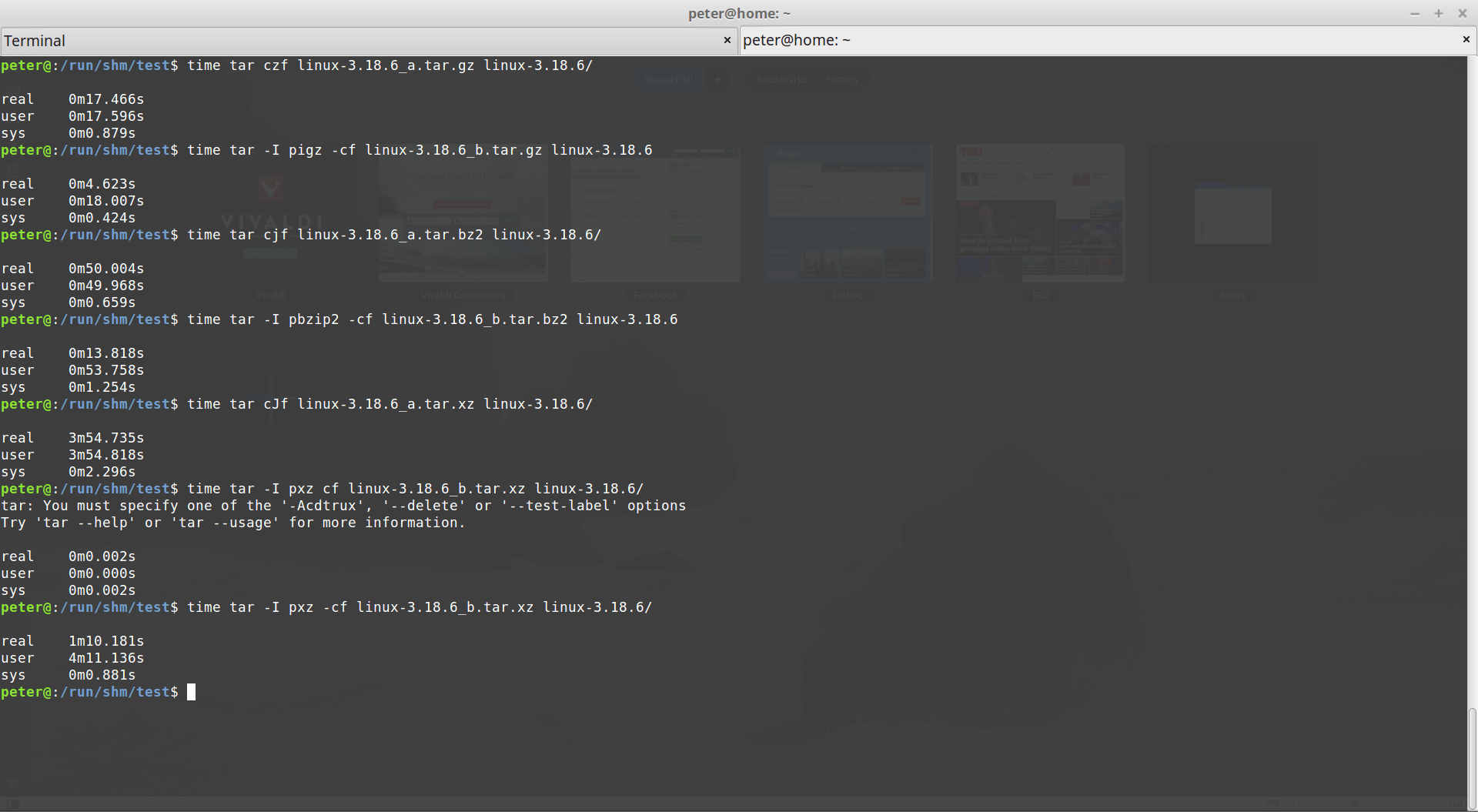

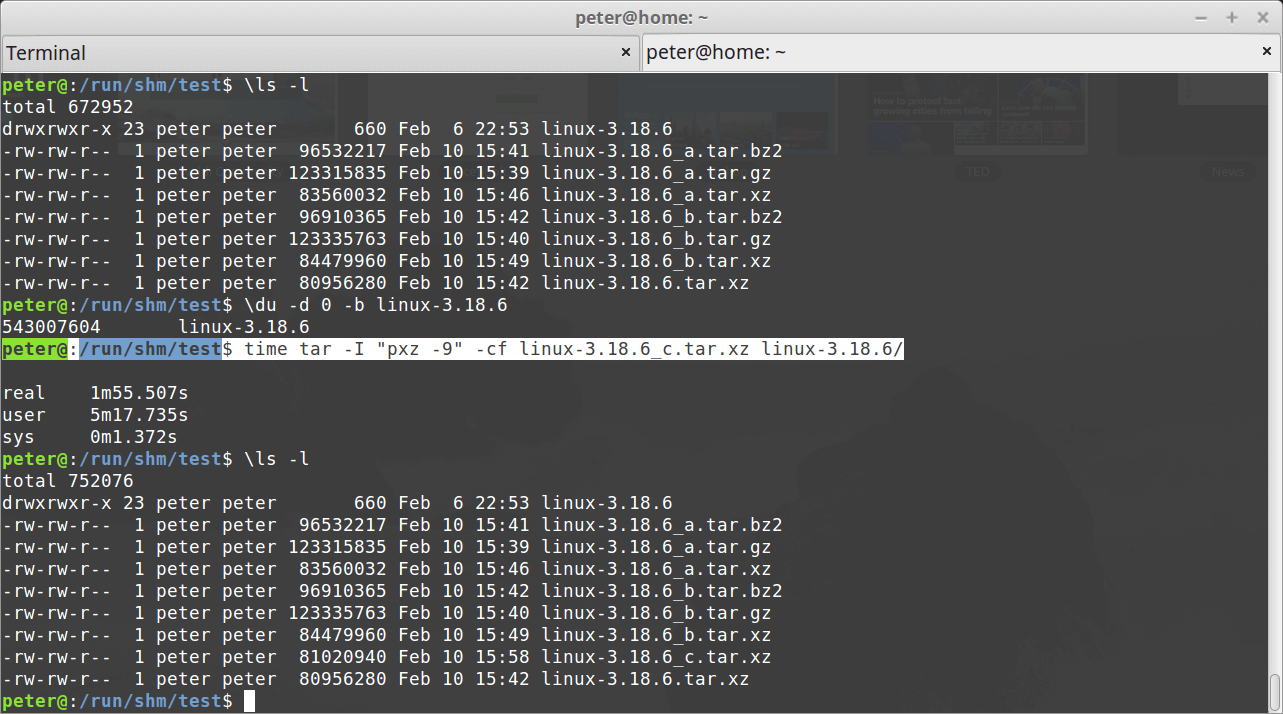

As the test case, I used the Linux kernel v3.18.6 source tree: I put the whole directory on a ramdisk so that disk speed would not affect the timing, compressed it with each tool, and then compared the results!

(PS: the CPU is an Intel(R) Xeon(R) CPU E3-1220 V2 @ 3.10GHz with 4 cores and 4 threads, and the machine has 16 GB of RAM.)
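A rough way to reproduce this kind of measurement is sketched below. It is not the exact procedure behind the numbers that follow: it assumes bash (for the time keyword and TIMEFORMAT), uses /dev/shm as the ramdisk (a tmpfs on most Linux systems, with /tmp as a fallback elsewhere), and bench is a hypothetical helper. By default it generates a tiny demo input; set SRC to an unpacked source tree such as linux-3.18.6 for a realistic run.

```shell
#!/usr/bin/env bash
# Sketch: time one tar run per compressor on a RAM-backed directory.
set -euo pipefail

ram=/dev/shm
[ -d "$ram" ] || ram=${TMPDIR:-/tmp}   # fallback when there is no /dev/shm
work=$(mktemp -d "$ram/tarbench.XXXXXX")
trap 'rm -rf "$work"' EXIT

# Copy the input into RAM so disk reads do not dominate the timing.
if [ -n "${SRC:-}" ]; then
  cp -r "$SRC" "$work/src"
else
  mkdir "$work/src"
  seq 1 100000 > "$work/src/numbers.txt"   # tiny demo input
fi

# bench PROG OUTPUT: hypothetical helper; prints wall-clock time to stderr.
bench() {
  local TIMEFORMAT="$1: %3Rs"
  time tar -I "$1" -cf "$2" -C "$work" src
}

# Run every compressor that is installed, single- and multi-threaded.
for prog in gzip pigz bzip2 pbzip2 xz pixz; do
  if command -v "$prog" >/dev/null 2>&1; then
    bench "$prog" "$work/out-$prog"
  fi
done
```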

Result comparison (time spent):

| | gzip | bzip2 | xz |
|---|---|---|---|
| Single-thread | 17.466s | 50.004s | 3m54.735s |
| Multi-thread | 4.623s | 13.818s | 1m10.181s |
| Speedup | 3.78x | 3.62x | 3.34x |
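The last row of the table is simply the single-thread time divided by the multi-thread time. The sketch below recomputes it with awk after converting the xz times (3m54.735s and 1m10.181s) to 234.735 s and 70.181 s; speedup is a hypothetical helper name. For reference, the ideal on this 4-core, 4-thread CPU would be 4x.

```shell
#!/usr/bin/env bash
# speedup SINGLE MULTI: ratio of the two wall-clock times (in seconds).
speedup() {
  awk -v s="$1" -v m="$2" 'BEGIN { printf "%.2fx\n", s / m }'
}

speedup 17.466 4.623     # gzip  -> 3.78x
speedup 50.004 13.818    # bzip2 -> 3.62x
speedup 234.735 70.181   # xz    -> 3.34x
```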

Because I didn't pass any options to the compressors and let each one use its default compression level, the output files of the single-threaded and multi-threaded tools differ slightly in size, but they are quite close. We can still pass options to the external compression program like this: tar -I "pixz -9" -cf tarball.txz files. Just quote the program together with its arguments, which is also pretty easy.
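A runnable variant of the quoted-command approach, assuming GNU tar: the whole command, options included, goes into one quoted -I argument, and tar adds -d on its own when reading the archive back. It uses pigz -9 -p 2 (level 9, two threads) when pigz is available and gzip -9 otherwise; the thread count is only an illustrative value. pixz and pbzip2 also accept a thread count (pixz -p N, pbzip2 -pN).

```shell
#!/usr/bin/env bash
# Pass options to the external compressor by quoting the whole command.
set -euo pipefail

if command -v pigz >/dev/null 2>&1; then
  prog="pigz -9 -p 2"   # level 9, 2 compression threads
else
  prog="gzip -9"        # single-thread fallback, same gzip format
fi

work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
mkdir "$work/files"
seq 1 20000 > "$work/files/numbers.txt"

# One quoted -I argument: tar runs "$prog" to compress ...
tar -I "$prog" -cf "$work/tarball.tgz" -C "$work" files
# ... and "$prog -d" to decompress when listing or extracting.
tar -I "$prog" -tf "$work/tarball.tgz"
```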

Raising the compression level with -9 may need more memory while compressing, but the result shrinks from 84479960 bytes to 81020940 bytes, so we save an additional 3459020 bytes (about 3.3 MiB)! (It also took about 40 seconds longer, so it's your call!)
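The saving is plain arithmetic on the two file sizes. A quick check in the shell (using awk for the division) shows that the 3.3 figure is in MiB (2^20 bytes) and amounts to roughly 4.1% of the default-level archive.

```shell
#!/usr/bin/env bash
# Size difference between the default-level and the -9 archive.
default=84479960   # bytes, default compression level
best=81020940      # bytes, with -9

saved=$((default - best))
echo "saved: $saved bytes"   # prints: saved: 3459020 bytes

# Convert to MiB and to a percentage of the default-level archive.
awk -v s="$saved" -v d="$default" \
  'BEGIN { printf "%.2f MiB, %.1f%%\n", s / 1048576, 100 * s / d }'
```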

This is very useful for me!!!